The Living Dead.

It’s no coincidence that the people who argue about dualism versus physicalism are usually the same kinds of people who argue about secular evolution versus intelligent design. The connection is not that each side holds some overarching philosophy. It’s that one side, physicalism, leads to conclusions that are unbelievable to a conscious, spiritual mind.

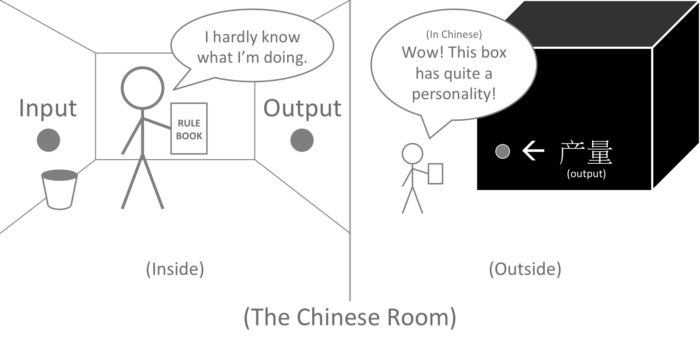

Consider the challenge of artificial intelligence, and of artificial consciousness in particular: the challenge of creating a machine that wholly perceives and experiences the world as people do. Philosophers and scientists alike have devised many tests and thought experiments to probe whether artificial consciousness is possible, including the Turing Test and John Searle’s less famous Chinese Room. Here’s a quick explanation of the Chinese Room:

Notes with Chinese characters come into a room. An English speaker inside uses a rule book (written in English) to pass the right notes back out. The speaker does not know what the inputs mean or where they come from, what the outputs mean or where they go, or what the rules mean. From the outside, the Chinese Room is just a big black box that you can hold conversations with in Chinese, and the conversations are so meaningful that the box seems to pass the Turing Test. The question, then, is whether the big black box is alive and aware. The obvious answer is no, as the person outside will see as soon as they open the box and find the English speaker inside, doing their job.

The Chinese Room highlights the difference between a conscious being, which experiences the world, and a system that merely processes and responds to the world through a filter. The thrust of the argument is that, just as the man in the room responds to inputs with outputs without understanding what any of them mean, a machine has no understanding of the outside world. Moreover, a machine has no capacity even to understand the world around it, because a machine is merely a series of mechanical processes and electrical circuits. These processes are no more capable of understanding the world than a bunch of rocks in a mudslide.

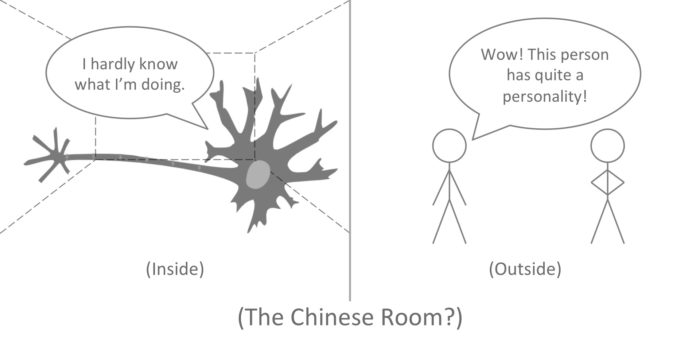

But under the philosophy of physicalism, how can we distinguish man from machine? If all human experience can be reduced to physical processes, there seems to be little intellectual difference between the two. Indeed, neuroscience and cognitive science have been able to tease apart the building blocks of cognition and the physical brain areas responsible for their activity. We understand this so precisely that we can create drugs that alter emotions, decision-making, and our sense of reality. Why, then, do we continue to hold this notion of “life”?

Given all that we know about the mind and biology, we can conclude that our notions of being “alive” are merely an illusion. We can say that experience is merely the chemical process of rendering the world around us, and that consciousness is just the chemical process of neurons firing in our heads. Indeed, this position is popular in academia. But if we take this position, that all we know, all we experience, and all we feel are just chemical reactions, then why is the Chinese Room argument compelling in the first place? After all, under physicalism, it is not merely that we have fooled ourselves into thinking we have spirits or souls; it is that we have fooled ourselves into thinking we are somehow “specially aware and alive.” Under physicalism, the truth would be that we are no more alive than a fire, a river, or a brick of lead. Our feelings of self-awareness and free agency would be unequivocally false. Let’s look at the Chinese Room again to understand this conclusion.

In the same way that it is absurd to think the big black Chinese box has a personality, it should be absurd to think that some kind of “awareness” can come from the neurons that make up the brain. If the neurons in the brain work like the man in the Chinese Room, then it’s hard to see how we can say we are alive.

Additionally, with the right algorithms, we could program a computer to process and react to information the same way the human brain does. We could then run an internal systems test whose results would be logically equivalent to a human brain scan, with all the computer’s systems “lighting up” just as brain regions do in a scan. Yet we would still wonder whether the computer actually perceives the world or whether it is just electricity flowing through a circuit, performing the protocols we built into it. But if we would not be convinced that this computer is alive, then what is our basis for saying we are alive?

The main point of the Chinese Room is that none of its parts has any conception of Chinese; the language has no meaning to them. Moreover, no part of the Chinese Room (aside from the English speaker) is even capable of appreciating meaning, let alone language. The room is just a room; the rule book is just a book. Even less can be said of neurons, for at least the English speaker in the room understands that he has a task to perform. Yet somehow, from an arbitrary arrangement of inanimate neurons, we find consciousness in the human brain.

To be clear, even “consciousness” may be an inadequate term. Some people would now like to define consciousness in terms of scientifically observable, quantifiable phenomena and trillions of chemical reactions. What I mean is something more. Call it “feeling,” for now. Somewhere between the organic machinery that makes up the mind and its networks, there is a part of us that translates this information into feeling. There is a part of us that doesn’t merely take inputs and give outputs, but actually feels the whole process as one. Somehow, there is a part of us that doesn’t merely generate and process emotion, but feels it, and the same goes for all our senses.

Imagine all the people you know. As you grow up, you develop an intuition that they have minds and feelings just as you do, without ever really questioning whether, just maybe, they don’t. What if they did not have minds? What if they did not have feelings? What if they were simply organic machines (philosophical zombies) that passed the Turing Test as well as any AI should? Such a body could carry on in this state, without feelings, and physicalists would be none the wiser. Its brain lights up and responds appropriately under every form of brain imaging. It shows well-adjusted, pro-social behavior and enthusiasm and vitality for its everyday activities. By every metric devised, it would be human, and yet it would not feel, not truly appreciate, the world around it. It would be just an extremely advanced machine processing its surroundings. Now, given all that scientific observation has told us about the human brain, why isn’t it the case that you, or I, or everybody is merely a philosophical zombie? Why are you here, when as far as science can tell, your body could thrive just as well without you?

The problem with physicalism is right there in the etymology: it reduces the mind strictly to its physical components. But if this is the correct way to understand ourselves, then nothing distinguishes the living from the dead, or man from machine, because all physicalism can tell us about life concerns its physical aspects. And at the end of the day, all those findings amount to elaborate chemical reactions, and that’s not the spark of life. That’s not feeling.

Update:

Someone might want to say that there’s something special about the stuff neurons are made of, and that this atomic composition is the reason we have feelings. I’m not familiar with the latest breakthroughs in these fields, and it may be that science can or will find some property (atomic or otherwise) that explains why we feel. But it is my understanding that physical properties exist on a spectrum. For example, some elements are more conductive than others, some are more insulating than others, some are more readily vaporized than others, and so on. So if there is some property that explains why we feel, I would expect it to exist in the stuff of computers, too, just to a greater or lesser degree. Let me know what you think.

Related reading:

http://en.wikipedia.org/wiki/Symbol_grounding

http://en.wikipedia.org/wiki/Philosophy_of_perception

http://plato.stanford.edu/entries/meaning/

This Cognition/Philosophy post was written by Princeton.
